At Linpowave, we have extensive experience deploying sensing systems in industrial robotics, autonomous vehicles, and environmental monitoring. Real-world testing has shown us that many systems operate perfectly in controlled settings but struggle in harsh environments. Heavy rain, dense fog, intense glare, and complex multipath reflections are statistically rare, yet they frequently determine whether a system succeeds or fails. Understanding and accounting for these edge cases is essential to safety, dependability, and commercial viability.

Why Most Systems Don’t Fail Under “Normal” Conditions

Standardized testing conditions, such as 25°C, 50% humidity, and a clear line of sight, are frequently the focus of sensing system design. LiDAR, radar, cameras, and ultrasonic sensors all perform consistently in these circumstances. Real-world settings, however, are much more unpredictable. System accuracy can be significantly impacted by light levels, weather, airborne particles, reflective surfaces, and abrupt interference.

A common oversight is the "long-tail risk fallacy." Rare events are often overlooked during design and testing, but a single extreme event, such as a sudden dust storm or unexpected urban multipath, can cause system downtime, accidents, or reputational damage. Even systems that meet nominal performance metrics may reveal vulnerabilities in hardware, software, or integration when subjected to extreme conditions.
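A quick calculation shows why "rare" is misleading. If an event has an independent probability p in each operating hour, the chance of meeting it at least once in n hours is 1 - (1 - p)^n. The figures below (one event per 10,000 hours, 10,000 operating hours) are hypothetical and chosen only to illustrate the arithmetic; treating hours as independent is itself a simplifying assumption.

```python
import math

def prob_at_least_one(p_per_hour: float, hours: float) -> float:
    """Probability that an event with independent per-hour probability
    p_per_hour (0 <= p < 1) occurs at least once in `hours` hours."""
    # Complement of "never occurs": 1 - (1 - p)^n.
    # log1p/expm1 keep the result accurate when p is very small.
    return -math.expm1(hours * math.log1p(-p_per_hour))

# Hypothetical example: a 1-in-10,000-hour event over 10,000 hours of operation.
print(round(prob_at_least_one(1e-4, 10_000), 3))  # → 0.632
```

Under these assumptions, an event that almost never happens in any single hour is more likely than not to happen at least once over a modest deployment.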

Extreme Environments That Are Often Underestimated

Atmospheric and Optical Interference

Among the biggest risks to sensor dependability are rain, fog, dust, and glare. LiDAR beams scatter during periods of heavy rainfall, resulting in false positives and a 30%–70% reduction in detection range. Visible light is scattered by fog, which lowers camera contrast and makes it harder to see important objects like pedestrians or traffic signs. Over time, dust buildup on lenses in desert or industrial settings can lower signal transmission by 20% to 50%.
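To see how attenuation eats into range, the sketch below uses a deliberately simplified LiDAR link budget: received power proportional to T² · exp(-2αR) / R², where α is the atmospheric extinction coefficient and T is the transmission of a dirty sensor window (squared because light crosses it on the way out and back). The model, the normalisation to a clear-air range, and the example numbers are our assumptions for illustration; it ignores backscatter clutter (the source of false positives), receiver noise details, and target size.

```python
import math

def max_range_m(clear_range_m: float, alpha_per_km: float,
                window_transmission: float = 1.0) -> float:
    """Detection range under a simplified (hypothetical) LiDAR link budget.

    Assumes received power ~ T^2 * exp(-2*alpha*R) / R^2 and a fixed detection
    threshold, normalised so that clear air (alpha = 0) and a clean window
    (T = 1) give clear_range_m. Requires 0 < window_transmission <= 1.
    """
    alpha = alpha_per_km / 1000.0  # extinction coefficient in 1/m

    def margin(r: float) -> float:
        # Log of (received power / threshold); strictly decreasing in r.
        return (2 * math.log(window_transmission) - 2 * alpha * r
                - 2 * math.log(r) + 2 * math.log(clear_range_m))

    lo, hi = 1e-6, clear_range_m  # margin(lo) > 0 and margin(hi) <= 0
    for _ in range(100):  # bisection on the sign of the margin
        mid = 0.5 * (lo + hi)
        if margin(mid) > 0:
            lo = mid
        else:
            hi = mid
    return lo

# Hypothetical sensor with 200 m clear-air range.
print(round(max_range_m(200, alpha_per_km=5), 1))           # attenuating weather
print(round(max_range_m(200, 0, window_transmission=0.7), 1))  # 30% dust loss
```

With a hypothetical extinction of 5 per km, range falls to roughly 113 m; with no weather but a window passing only 70% of the light in each direction, it falls to 140 m. Both effects compound when present together.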

Glare from direct sunlight, or from reflective surfaces such as wet roads and glass buildings, can saturate camera sensors and obscure critical details like pedestrian outlines. These problems are not hypothetical: field tests in autonomous-vehicle trials have shown that visual sensors can fail under these conditions while radar continues to provide crucial situational awareness.
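One inexpensive way to flag glare at runtime is to measure how much of a frame is clipped at the sensor's maximum value. This is a minimal sketch, assuming an 8-bit grayscale frame passed as nested lists; the function names and the 5% threshold are hypothetical starting points, not calibrated values.

```python
def saturated_fraction(image, max_value=255):
    """Fraction of pixels clipped at the sensor's maximum value."""
    pixels = [p for row in image for p in row]
    return sum(p >= max_value for p in pixels) / len(pixels)

def glare_suspected(image, max_value=255, threshold=0.05):
    # A large clipped area suggests glare is hiding detail in that region.
    return saturated_fraction(image, max_value) > threshold

frame = [
    [10, 20, 255, 255],
    [30, 255, 40, 50],
    [255, 60, 70, 80],
    [90, 100, 110, 120],
]
print(saturated_fraction(frame))  # → 0.25
print(glare_suspected(frame))     # → True
```

A flag like this does not restore the lost detail; it tells the rest of the system to lower its trust in the camera for that frame.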

Multipath Reflections

Multipath reflection occurs when sensor signals bounce off one or more surfaces before returning to the receiver, producing ghost signals or distorted measurements. Radar can mistake these reflected waves for real objects, creating ghost targets or inaccurate distance readings, and LiDAR and ultrasonic sensors can suffer similar time-of-flight errors. Although multipath is sometimes treated as minor interference in lab settings, it can cause catastrophic failures in high-precision applications such as drone navigation, industrial robotics, and autonomous vehicles.
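The geometry behind ghost targets can be sketched with the image method. We assume a monostatic sensor at the origin, a single flat, perfectly specular reflector along the line y = wall_y (for example a wall or guardrail), and a target on the same side of the wall as the sensor; real scenes have many reflectors and rough surfaces, so this only illustrates the mechanism.

```python
import math

def multipath_ranges(target, wall_y):
    """Apparent ranges (m) for a sensor at the origin and a flat reflector
    along y = wall_y, using the image method for specular reflection."""
    tx, ty = target
    direct = math.hypot(tx, ty)
    # Mirroring the target across the wall turns the path
    # sensor -> wall -> target into a straight line sensor -> image.
    image = (tx, 2 * wall_y - ty)
    via_wall = math.hypot(*image)
    return {
        "direct": direct,                       # true target
        "one_bounce": (direct + via_wall) / 2,  # direct one way, via wall the other
        "two_bounce": via_wall,                 # via wall both ways: ghost behind the wall
    }

r = multipath_ranges(target=(30.0, 0.0), wall_y=4.0)
print({k: round(v, 2) for k, v in r.items()})
```

Each reflected path is longer than the direct one, so the sensor sees extra returns at slightly greater ranges; the two-bounce return appears to come from a point behind the wall where nothing exists.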

How Different Sensors Respond to Extreme Conditions

Cameras are especially vulnerable to dust, fog, and glare, which can cause low contrast, sensor saturation, or lens obstruction. The resulting object-recognition failures can lead to misclassifications or missed detections. Rain, fog, and snow scatter LiDAR beams, reducing their range and producing false positives or false negatives.

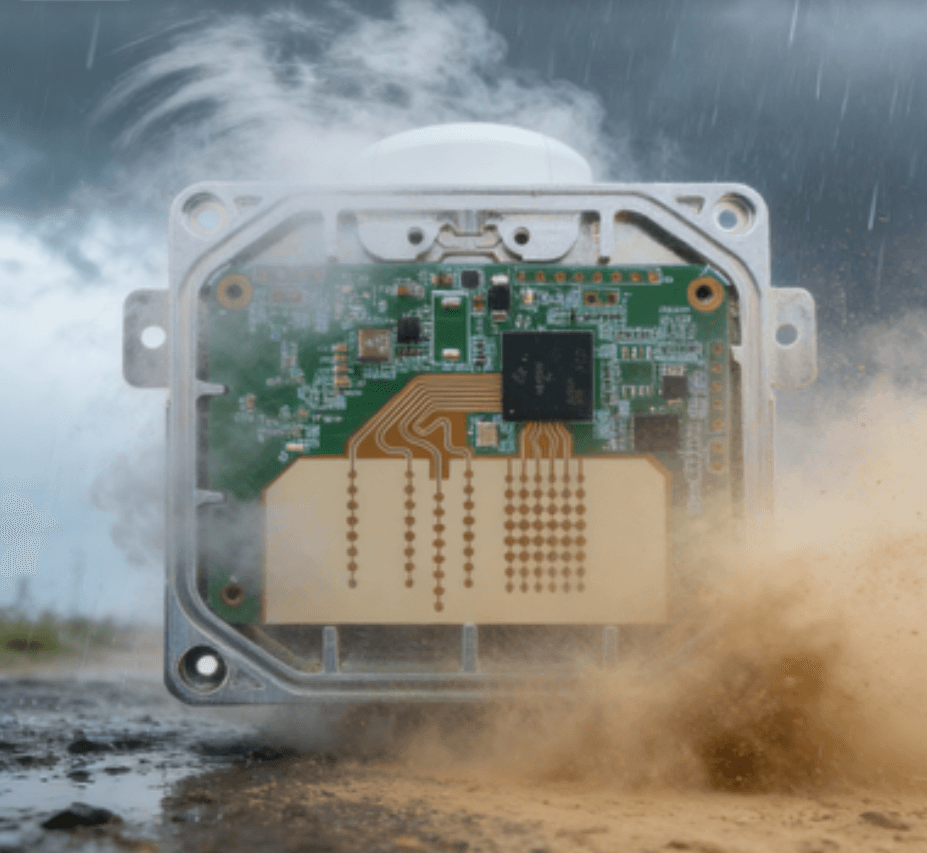

Although multipath reflections and heavy rain can still cause ghost targets or range errors, millimeter-wave radar is generally more resilient in bad weather. At Linpowave, our millimeter-wave radar products are designed to maintain performance under these conditions. They have been used in industrial automation projects, autonomous cars, and drones, where they have demonstrated strong sensing performance in low-light, foggy, and rainy conditions.

In dusty or humid environments, acoustic waves are attenuated more strongly, which can shorten an ultrasonic sensor's detection range and slow its response. Extreme heat or cold can cause temperature and humidity sensors to drift or malfunction. These failure modes do not occur at random; they are predictable consequences of the physical constraints of each sensor type.
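Temperature is a good example of such a predictable effect: an ultrasonic sensor converts time of flight to distance using an assumed speed of sound, and that speed changes with air temperature. This sketch uses the common linear approximation c ≈ 331.3 + 0.606·T m/s for dry air; the 20 °C calibration temperature and the function names are hypothetical, and humidity effects are ignored.

```python
def speed_of_sound(temp_c: float) -> float:
    # Linear approximation for dry air, in m/s (temp_c in degrees Celsius).
    return 331.3 + 0.606 * temp_c

def reported_distance(true_distance_m: float, actual_temp_c: float,
                      assumed_temp_c: float = 20.0) -> float:
    """Distance an uncompensated sensor reports when calibrated at assumed_temp_c."""
    tof_s = 2 * true_distance_m / speed_of_sound(actual_temp_c)  # round trip
    return speed_of_sound(assumed_temp_c) * tof_s / 2

# A 2.0 m target measured at -20 °C by a sensor that assumes 20 °C.
print(round(reported_distance(2.0, -20.0), 3))  # → 2.152
```

The error is systematic (about 7.6% here), which is exactly why it can be compensated with a temperature reading rather than left to chance.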

Why Edge Cases Cannot Be Solved by Algorithms Alone

Some teams believe that sophisticated algorithms can compensate for weak edge-case design. This strategy, however, is limited in three ways. First, software cannot recover physical signal loss: if rain scatters 90% of a LiDAR's photons, no algorithm can restore the information those photons carried. Second, complex algorithms cannot guarantee real-time responses to extreme events. Third, multipath reflections produce ambiguous measurements that can be misinterpreted and lead to unsafe decisions.
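The first two limits are linked. The usual algorithmic remedy for weak returns, averaging repeated frames, does not recover scattered photons; it collects more of them over time. This sketch assumes a static scene and zero-mean noise independent from frame to frame, so that averaging N frames improves power SNR by a factor of N; the 10 Hz frame rate is hypothetical.

```python
import math

def frames_to_restore_snr(signal_power_fraction: float) -> int:
    # Averaging N frames raises power SNR by a factor of N (static scene,
    # independent zero-mean noise), so N = 1 / fraction restores the original SNR.
    # The tiny tolerance guards against float error pushing an exact ratio
    # just above an integer.
    return math.ceil(1.0 / signal_power_fraction - 1e-9)

def added_latency_s(signal_power_fraction: float, frame_rate_hz: float) -> float:
    # Approximate time spent collecting those frames before a decision is made.
    return frames_to_restore_snr(signal_power_fraction) / frame_rate_hz

# Rain scatters 90% of the signal, leaving 10%.
print(frames_to_restore_snr(0.10))   # → 10
print(added_latency_s(0.10, 10.0))   # → 1.0
```

Even under these favourable assumptions, recovering the SNR costs about a second at 10 Hz, and in a moving scene the static-scene assumption breaks down long before that.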

As a result, incorporating hardware, software, and environmental design considerations early on is necessary for true robustness.

Proactive Design Techniques

Scenario-Driven Testing

Prior to deployment, test systems in simulated extreme environments, such as multipath testbeds, dust tunnels, or fog chambers, to find vulnerabilities.
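A scenario-driven campaign starts by enumerating the combinations of conditions to test, so that compound cases (fog plus reflective walls, rain plus dust) are not skipped. The condition names and levels below are hypothetical placeholders; a real program would derive them from its operating requirements and test facilities.

```python
from itertools import product

# Hypothetical condition levels for a test campaign.
CONDITIONS = {
    "precipitation": ["none", "heavy_rain"],
    "visibility": ["clear", "fog_200m", "fog_50m"],
    "dust": ["none", "dense"],
    "multipath": ["open", "reflective_walls"],
}

def scenario_matrix(conditions):
    """Full-factorial list of scenarios, one dict per combination of levels."""
    names = list(conditions)
    return [dict(zip(names, combo)) for combo in product(*conditions.values())]

scenarios = scenario_matrix(CONDITIONS)
print(len(scenarios))  # → 24
print(scenarios[0])    # first (baseline) scenario
```

A full factorial grows quickly as conditions are added; when it becomes too large, teams often prune to the highest-risk combinations rather than dropping the compound cases entirely.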

Sensor Fusion

Combining complementary sensor technologies offsets the weaknesses of each one. Fusing LiDAR, thermal cameras, and radar enables accurate perception in situations where any single sensor might fail. Linpowave's millimeter-wave radar products are optimized for sensor fusion and provide stable performance even in dense fog or low light.
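One simple fusion scheme is inverse-variance weighting: each sensor's estimate is weighted by how much it is currently trusted, so a fog-degraded LiDAR contributes little while radar dominates. This is a generic sketch, not a description of any specific product; it assumes independent, unbiased errors, and the per-sensor uncertainties are hypothetical. Production systems usually fuse over time as well, for example with Kalman filtering.

```python
def fuse_ranges(estimates):
    """Inverse-variance weighted fusion of independent range estimates.

    estimates: list of (range_m, std_m); entries with std_m None are skipped
    (sensor unavailable). Returns (fused_range_m, fused_std_m).
    """
    usable = [(r, s) for r, s in estimates if s is not None and s > 0]
    if not usable:
        raise ValueError("no usable sensor estimates")
    weights = [1.0 / s**2 for _, s in usable]
    total = sum(weights)
    fused = sum(w * r for w, (r, _) in zip(weights, usable)) / total
    return fused, (1.0 / total) ** 0.5

# Hypothetical fog scenario: LiDAR uncertainty inflated, camera unavailable.
fog = [(24.0, 5.0),   # LiDAR
       (22.0, None),  # camera
       (20.0, 0.5)]   # radar
fused, std = fuse_ranges(fog)
print(round(fused, 2), round(std, 2))
```

The fused estimate stays close to the radar reading, and its uncertainty is never worse than that of the best individual sensor.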

Hardware Strengthening

To ensure dependability in challenging conditions, use materials and coatings that are resistant to water, dust, and glare, and choose sensors that are rated for broad temperature ranges.
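Hardware requirements like these can be screened automatically during component selection. This sketch checks a sensor's rated temperature range against the deployment range plus a safety margin, and reads dust and water resistance from its IEC 60529 IP code (for example "IP67": first digit for solids, second for water). The class, thresholds, and margin are hypothetical; the parser handles only plain two-digit codes, and comparing water digits numerically is a simplification, since IEC 60529 water ratings above 6 are not automatically cumulative.

```python
from dataclasses import dataclass

@dataclass
class SensorSpec:
    name: str
    ip_code: str        # e.g. "IP67"
    temp_min_c: float   # rated operating range
    temp_max_c: float

def ip_digits(ip_code: str) -> tuple:
    """'IP67' -> (6, 7). Only plain two-digit codes are supported here."""
    code = ip_code.upper()
    if not (code.startswith("IP") and len(code) == 4 and code[2:].isdigit()):
        raise ValueError(f"unsupported IP code: {ip_code}")
    return int(code[2]), int(code[3])

def meets_requirements(spec, env_min_c, env_max_c,
                       min_dust=6, min_water=7, margin_c=5.0):
    dust, water = ip_digits(spec.ip_code)
    return (dust >= min_dust and water >= min_water
            and spec.temp_min_c <= env_min_c - margin_c
            and spec.temp_max_c >= env_max_c + margin_c)

print(meets_requirements(SensorSpec("radar_a", "IP67", -40, 85), -30, 70))  # → True
print(meets_requirements(SensorSpec("cam_b", "IP54", -20, 60), -30, 70))    # → False
```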

Dynamic Adjustment of Parameters

To ensure consistent performance, use real-time environmental monitoring to modify LiDAR power, camera exposure, and other settings based on the conditions.
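As one example of parameter adjustment, the sketch below is a single step of a simple auto-exposure loop: it backs off sharply when glare clips too many pixels, and otherwise nudges exposure toward a target brightness. All parameter names, defaults, and limits are hypothetical; the same bounded-feedback pattern could drive LiDAR output power, where the upper bound would come from eye-safety limits.

```python
def next_exposure_us(current_us, mean_level, saturated_frac,
                     target_level=0.45, max_saturated=0.02,
                     gain=0.5, min_us=10.0, max_us=20000.0):
    """One step of a hypothetical auto-exposure controller.

    mean_level: mean pixel value normalised to [0, 1].
    saturated_frac: fraction of clipped pixels (a glare indicator).
    """
    if saturated_frac > max_saturated:
        # Clipped highlights hide detail: halve exposure regardless of the mean.
        proposed = current_us * 0.5
    else:
        # Multiplicative correction toward the target; gain < 1 damps oscillation.
        ratio = target_level / max(mean_level, 1e-3)
        proposed = current_us * ratio ** gain
    # Keep exposure within the sensor's supported range.
    return min(max(proposed, min_us), max_us)

print(next_exposure_us(1000, mean_level=0.30, saturated_frac=0.10))  # glare: 500.0
print(next_exposure_us(1000, mean_level=0.45, saturated_frac=0.00))  # on target: 1000.0
```

Halving on glare is a blunt rule: if the glare comes from a small bright spot, it can darken the rest of the scene, so a real controller would weigh clipped area against the brightness of the regions that matter.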

Redundancy and Fault Tolerance

Design fallback strategies and degraded operating modes. For instance, a drone navigating through fog might switch to radar-only guidance to keep operating safely.
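A degraded-mode policy can be expressed as a small function from sensor health to operating mode. This sketch assumes some upstream monitor already marks each sensor as trustworthy or not; the mode names and the conservative policy (stop unless radar or the full optical suite is healthy) are hypothetical choices for illustration.

```python
from enum import Enum

class Mode(Enum):
    NOMINAL = "nominal"
    RADAR_ONLY = "radar_only"      # e.g. fog: reduced speed, radar guidance
    OPTICAL_ONLY = "optical_only"  # radar fault: camera + LiDAR guidance
    SAFE_STOP = "safe_stop"        # e.g. hover or land for a drone

def select_mode(health):
    """health: dict mapping sensor name -> True if currently trustworthy."""
    radar_ok = health.get("radar", False)
    optical_ok = health.get("camera", False) and health.get("lidar", False)
    if radar_ok and optical_ok:
        return Mode.NOMINAL
    if radar_ok:
        return Mode.RADAR_ONLY
    if optical_ok:
        return Mode.OPTICAL_ONLY
    # Missing health entries count as unhealthy, so unknown states fail safe.
    return Mode.SAFE_STOP

print(select_mode({"radar": True, "camera": False, "lidar": False}))  # fog
```

In practice, the mode switch usually needs hysteresis, such as requiring a sensor to stay healthy for a set time before leaving a degraded mode, so the system does not oscillate between modes in patchy conditions.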

Summary

Edge cases are not exceptions; they are the ultimate test of a sensing system's resilience. Environmental extremes expose the gap between theoretical performance and real-world reliability, and algorithms alone cannot fully mitigate their effects. By prioritizing extreme-scenario design, thorough testing, sensor fusion, and robust hardware, engineers can build systems that operate safely and dependably in even the most challenging circumstances. True success in sensing technology is measured by resilience on the most difficult days, not by performance on average days.