Introduction: A New Turning Point in Perception Technology

Traditional assisted driving is giving way to more sophisticated, tightly integrated autonomous driving systems. This shift places new demands on perception, particularly on its ability to provide a consistent, structured, and reliable understanding of complex environments. Vision-only systems still struggle with real-world conditions such as fog, rain, backlighting and glare at night, multilevel viaducts, and low-height static obstacles. Conventional 3D mmWave radar has its own limitation: it cannot measure elevation.

Against this backdrop, 4D mmWave radar is becoming a foundational sensing technology: it improves depth perception, strengthens spatial understanding, and maintains reliable performance across a wide range of environmental conditions. It fills the gaps left when other sensors degrade and provides high-value, structured data that suits the latest transformer- and BEV-based perception models.

This article examines the technological significance of 4D mmWave radar, its role in next-generation sensor fusion systems, key application scenarios, and Linpowave's practical deployment across different levels of driving automation.

4D mmWave Radar's Technological Advancement

Improved Spatial Representation for Accurate 3D Understanding

Conventional millimeter-wave radar typically outputs only range, velocity, and azimuth angle, which limits its ability to resolve vertical structure. As a result, scenes with ramps, viaducts, low-height obstacles, or multilevel traffic are difficult to interpret.

By adding elevation (pitch angle), 4D mmWave radar broadens spatial sensing and allows for a more thorough 3D interpretation of the surroundings.
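To make the extra dimension concrete, the sketch below converts one 4D detection (range, azimuth, elevation, radial velocity) from the sensor's polar measurements into Cartesian x/y/z. It is a minimal illustration, not Linpowave's processing chain; the axis convention (x forward, y left, z up) and the `RadarDetection` type are assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One 4D radar detection (hypothetical type for illustration)."""
    range_m: float              # distance to the reflector
    azimuth_rad: float          # horizontal angle, positive toward +y (left)
    elevation_rad: float        # vertical angle, positive upward
    radial_velocity_mps: float  # Doppler-derived range rate


def to_cartesian(det: RadarDetection) -> tuple[float, float, float]:
    """Convert a detection from sensor polar coordinates to sensor-frame x/y/z.

    Assumed convention: x forward, y left, z up; azimuth measured from the
    x-axis toward +y, elevation measured up from the x-y plane.
    """
    horizontal = det.range_m * math.cos(det.elevation_rad)  # projection onto the horizontal plane
    x = horizontal * math.cos(det.azimuth_rad)
    y = horizontal * math.sin(det.azimuth_rad)
    z = det.range_m * math.sin(det.elevation_rad)  # the height term a 3D radar cannot recover
    return x, y, z
```

Set `elevation_rad` to zero, as a 3D radar implicitly does, and every point collapses onto the sensor's horizontal plane; that is why a viaduct deck and the road beneath it can look alike to a conventional radar.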

This improvement helps autonomous vehicles to:

- Distinguish elevated-level traffic from ground-level traffic
- Detect traffic cones, roadside curbs, and other low-height objects
- Recognize road topology, including upward and downward slopes

More structured data gives planning and control modules higher-quality input, which leads to more stable and predictable vehicle behavior across a variety of situations.
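The distinctions listed above ultimately rest on each point's height above the road. Here is a minimal sketch, assuming a level, forward-facing sensor on flat ground and detections that have already been clustered; the height thresholds and the function names `height_above_road` and `classify_cluster` are hypothetical, chosen only for illustration.

```python
import math
from typing import Iterable

# Hypothetical thresholds (metres above the road), for illustration only.
LOW_OBJECT_MAX_HEIGHT = 1.0  # e.g. curbs, traffic cones
ELEVATED_MIN_HEIGHT = 4.5    # assumed minimum clearance under a viaduct


def height_above_road(range_m: float, elevation_rad: float, mount_height_m: float) -> float:
    """Height of a detection above a flat road, for a level, forward-facing sensor."""
    return mount_height_m + range_m * math.sin(elevation_rad)


def classify_cluster(points: Iterable[tuple[float, float]], mount_height_m: float) -> str:
    """Label a cluster of (range_m, elevation_rad) detections by its vertical extent."""
    heights = [height_above_road(r, el, mount_height_m) for r, el in points]
    if not heights:
        raise ValueError("empty cluster")
    if min(heights) >= ELEVATED_MIN_HEIGHT:
        return "elevated"      # entirely above the assumed clearance: viaduct level
    if max(heights) <= LOW_OBJECT_MAX_HEIGHT:
        return "low_height"    # never rises above ~1 m: curb, cone, debris
    return "ground_level"      # spans normal vehicle heights
```

A production system would also compensate for vehicle pitch and road slope, which this sketch ignores.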

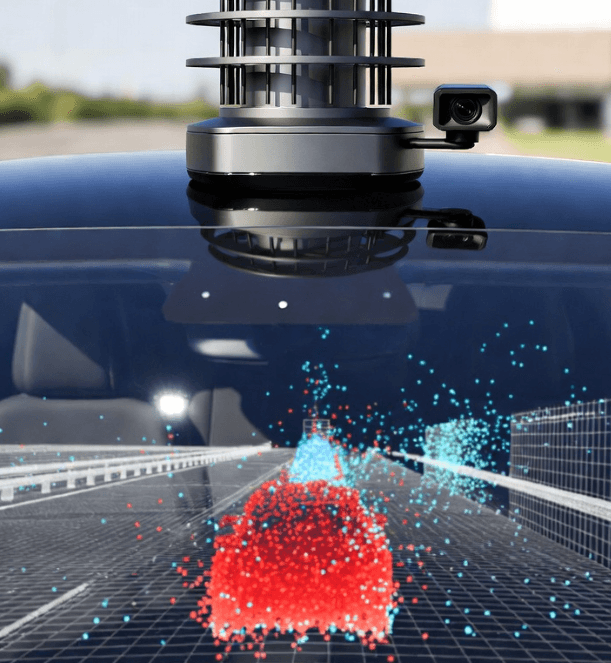

Richer Geometric Detail through High-Density Point Clouds

Using sophisticated MIMO antenna arrays and optimized signal-processing pipelines, 4D mmWave radar produces dense, structured point clouds. Although they are not as dense as LiDAR point clouds, these outputs carry enough spatial detail to describe object contours, shapes, and motion patterns.
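Why do MIMO arrays yield denser point clouds? With N_tx transmit and N_rx receive antennas, a MIMO radar can synthesize up to N_tx × N_rx virtual channels, and a larger virtual aperture gives finer angular resolution, so nearby reflectors separate into distinct points. The sketch below uses the common approximation θ_res ≈ λ / (N·d·cos θ) for a uniform linear array; the antenna counts used with it are hypothetical and are not Linpowave specifications.

```python
import math


def virtual_channels(n_tx: int, n_rx: int) -> int:
    """Upper bound on the virtual channels a MIMO radar can synthesize
    (some may coincide, depending on the physical antenna layout)."""
    return n_tx * n_rx


def angular_resolution_deg(n_elements: int, spacing_wavelengths: float = 0.5,
                           angle_deg: float = 0.0) -> float:
    """Approximate angular resolution of a uniform linear (virtual) array.

    Uses theta_res ~ lambda / (N * d * cos(theta)), with d given in wavelengths,
    so lambda cancels out. Resolution degrades away from boresight.
    """
    theta = math.radians(angle_deg)
    return math.degrees(1.0 / (n_elements * spacing_wavelengths * math.cos(theta)))
```

For instance, a 3 Tx × 4 Rx layout gives 12 virtual elements and roughly 9.5° resolution at boresight with half-wavelength spacing. In a 4D radar the virtual elements are distributed across both the azimuth and elevation axes, so each axis is resolved by its own element count.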

This level of geometric detail supports:

- More reliable semantic understanding and environment modeling
- Consistent performance in low light and adverse weather
- Stronger depth priors for BEV-based deep learning models

In situations where vision systems degrade, such as heavy rain or strong backlighting, 4D radar can continue to deliver reliable, usable data.

4D Radar's Growing Significance in Multi-Sensor Fusion

"Core Sensor" as opposed to "Supporting Sensor"

In the past, mmWave radar mainly served as a supporting sensor, supplying velocity and range information to supplement vision. As the industry moves toward L2++ and L3, which raises the need for redundancy and structured depth sensing, 4D radar is becoming an essential part of the perception stack.

Its point cloud output aligns naturally with vision depth maps and BEV model inputs, which makes fusion more coherent and easier to optimize. 4D radar can also substantially improve the system's ability to generalize to challenging long-tail situations, such as foggy intersections or nighttime highway merges.
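One common way to feed radar points into a BEV model is to scatter them into a ground-plane grid and compute simple per-cell features. The sketch below assumes points already transformed into the ego frame as `(x, y, z, v_r)` tuples; the grid extent, the 0.5 m default cell size, the `BevGridSpec` type, and the three feature channels are illustrative assumptions. Many published models instead learn the per-cell encoding (for example, PointPillars-style pillar encoders).

```python
import math
from dataclasses import dataclass


@dataclass
class BevGridSpec:
    """Hypothetical BEV grid layout in the ego frame (metres)."""
    x_min: float = 0.0
    x_max: float = 100.0
    y_min: float = -50.0
    y_max: float = 50.0
    cell_m: float = 0.5

    @property
    def shape(self) -> tuple[int, int]:
        return (round((self.x_max - self.x_min) / self.cell_m),
                round((self.y_max - self.y_min) / self.cell_m))


def rasterize_bev(points, spec: BevGridSpec):
    """Scatter (x, y, z, v_r) radar points into per-cell BEV features.

    Returns three H x W grids: point count, max height, and mean radial velocity.
    """
    h, w = spec.shape
    count = [[0] * w for _ in range(h)]
    max_z = [[-math.inf] * w for _ in range(h)]
    vel_sum = [[0.0] * w for _ in range(h)]
    for x, y, z, v_r in points:
        i = math.floor((x - spec.x_min) / spec.cell_m)
        j = math.floor((y - spec.y_min) / spec.cell_m)
        if not (0 <= i < h and 0 <= j < w):
            continue  # point lies outside the grid
        count[i][j] += 1
        max_z[i][j] = max(max_z[i][j], z)
        vel_sum[i][j] += v_r
    mean_v = [[vel_sum[i][j] / count[i][j] if count[i][j] else 0.0 for j in range(w)]
              for i in range(h)]
    return count, max_z, mean_v
```

Because the camera branch of a BEV model produces features on the same kind of ground-plane grid, radar channels like these can be concatenated with them cell by cell.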

The Evolution of Fusion: From Rule-Based to Model-Based

Rather than relying on hand-crafted rules, modern AV stacks use neural networks to learn multi-modal features jointly.

In these model-based fusion architectures, 4D radar contributes:

- Stable speed and range measurements
- Robust geometry that is independent of lighting
- A structured point cloud well suited to deep fusion

Because of these traits, networks often learn to give radar features a higher weight during training, which improves the consistency and reliability of perception outputs.
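The idea of a learned modality weight can be illustrated with a generic attention-style fusion step: each modality receives a score, a softmax turns the scores into weights, and the fused feature is the weighted sum. This is a minimal sketch, not a specific published architecture; the `fuse_features` function, the feature vectors, and the score weights (which a real network would learn during training) are all hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_features(features: dict[str, list[float]],
                  score_weights: dict[str, list[float]]):
    """Weighted fusion of per-modality feature vectors of equal length.

    Each modality's score is the dot product of its feature vector with a
    (normally learned) weight vector; softmax turns scores into modality weights.
    """
    names = list(features)
    scores = [sum(a * b for a, b in zip(features[n], score_weights[n])) for n in names]
    weights = dict(zip(names, softmax(scores)))
    dim = len(features[names[0]])
    fused = [sum(weights[n] * features[n][k] for n in names) for k in range(dim)]
    return fused, weights
```

If radar features prove more predictive in conditions where the camera struggles, training adjusts the score weights so the softmax shifts toward radar in those cases.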

Important 4D Radar Application Scenarios

Severe Weather and Difficult Lighting

Heavy fog, nighttime backlighting, and reflections from rain-soaked roads can all degrade vision systems.

Because 4D radar maintains comparatively stable point cloud output under these conditions, it enables continuous perception when visibility is low.

High-Speed Forward Perception

Predictive decision-making in highway scenarios requires precise long-range detection and early trajectory estimation.

4D radar excels at:

- Capturing small, distant objects
- Providing smooth, continuous velocity data
- Supporting early warnings during fast maneuvers

This makes it especially useful for highway assisted driving and Navigate on Autopilot (NOA).
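Radar measures radial velocity directly from the Doppler shift within a single frame, rather than by differencing positions across several frames, which is what makes early warnings possible. The sketch below converts a Doppler shift to range rate and derives a constant-velocity time-to-collision (TTC). The 77 GHz carrier, the sign convention (negative range rate means approaching), and the 3 s warning threshold are assumptions for illustration, not parameters of any particular product.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_rate_from_doppler(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial velocity (m/s) from a Doppler shift; negative means approaching.

    Assumes a positive shift for an approaching target and a two-way path,
    so |f_d| = 2 * |v| / wavelength.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return -doppler_hz * wavelength / 2.0


def time_to_collision(range_m: float, range_rate_mps: float) -> float:
    """Seconds until range reaches zero at a constant range rate; inf if not closing."""
    if range_rate_mps >= 0.0:
        return math.inf
    return range_m / -range_rate_mps


def should_warn(range_m: float, range_rate_mps: float, ttc_threshold_s: float = 3.0) -> bool:
    """Hypothetical forward-warning rule: warn when TTC drops below a threshold."""
    return time_to_collision(range_m, range_rate_mps) < ttc_threshold_s
```

A lead vehicle 120 m ahead closing at 10 m/s has a TTC of 12 s, so the example rule stays silent; at 20 m with the same closing speed, the TTC of 2 s would trigger a warning.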

Low-Speed, Close-Range Urban Scenarios

Parking, narrow-road navigation, and heavy-traffic assistance all require accurate detection of static obstacles and near-range detail.

The stable, structured geometric data from 4D radar improves:

- Curb detection
- Detection of low-height objects
- Predictability in complex, low-speed situations
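At low speed, separating static obstacles (curbs, parked cars, bollards) from moving ones is largely a Doppler check: a stationary reflector should show exactly the range rate produced by the ego vehicle's own motion. A minimal sketch, assuming a forward-facing sensor aligned with the vehicle axis, straight-line driving with no yaw rate, and a hypothetical 0.3 m/s tolerance:

```python
import math


def expected_static_range_rate(ego_speed_mps: float, azimuth_rad: float,
                               elevation_rad: float) -> float:
    """Range rate a stationary point would show to a forward-facing sensor
    on a vehicle driving straight ahead (no yaw rate, no mounting offset)."""
    return -ego_speed_mps * math.cos(azimuth_rad) * math.cos(elevation_rad)


def is_static(range_rate_mps: float, ego_speed_mps: float, azimuth_rad: float,
              elevation_rad: float, tolerance_mps: float = 0.3) -> bool:
    """Classify a detection as stationary if its Doppler matches pure ego motion."""
    residual = range_rate_mps - expected_static_range_rate(
        ego_speed_mps, azimuth_rad, elevation_rad)
    return abs(residual) <= tolerance_mps
```

Points that pass the test can be accumulated over time into a static map for curb and low-obstacle detection, while the remaining points are handed to object tracking.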

Linpowave's 4D Radar Technology and Deployment

A Comprehensive Product Portfolio Covering Multiple Scenarios

Building on its expertise in MIMO antenna design, point cloud modeling, and signal-processing pipelines, Linpowave offers a wide range of 4D radar products for different driving scenarios.

V300 Series: Long- and Mid-Range Perception

Designed for front and side sensing, the V300 outputs high-density, structured point clouds over an Ethernet interface. It integrates readily with vision for multi-sensor fusion and supports L2++ and L3 systems that require high-bandwidth environmental data.

U300 Series: Mid- and Short-Range Urban Sensing

The U300 reliably detects small, stationary, or close-range objects in low-speed, near-range situations such as parking or traffic jam assistance (TJA). This improves vehicle control in confined spaces and crowded urban environments.

Linpowave also provides developers with SDKs, APIs, toolchains, and clear point cloud documentation to support efficient algorithm development and seamless integration with existing fusion frameworks.

Industry Outlook: Fusion-Ready, Cost-Optimized, and Scalable

As radar chipsets advance and costs fall, 4D mmWave radar is being adopted more widely in mass-market vehicles. Its balance of cost-effectiveness, robustness, and performance positions it as a key sensing technology for upcoming L2++ to L3 architectures.

With the growing emphasis on stability and structured depth sensing, 4D radar is expected to remain a crucial component of next-generation autonomous driving systems.

FAQs

Can 4D mmWave radar replace LiDAR?

LiDAR and 4D radar are complementary and work well together. Whether LiDAR is adopted depends on system requirements and budget, while vision + 4D radar is emerging as a popular, cost-effective mainstream architecture.

How does 4D radar perform in inclement weather?

In rain, fog, or low light, 4D radar typically maintains more stable sensing than vision, although actual performance depends on the scenario and the sensor configuration.

Does Linpowave offer development support?

Yes. Linpowave provides comprehensive point cloud documentation, SDKs, APIs, and toolchains to support the development of fusion models.

Is 4D radar appropriate for both urban and highway roads?

Yes. The V300 series is best suited for long-range, high-speed perception, while the U300 series performs well in near-range, low-speed urban situations.