Autonomous AI systems, such as self-driving cars, delivery drones, and industrial robots, operate without direct human supervision. Engineers add millimeter-wave (mmWave) radar to these systems because it detects objects and measures their distance and speed, even in fog, rain, or dust. Radar improves the system's awareness, but it also introduces new points where attacks or failures may occur. Organizations use red-teaming to simulate attacks and test these systems. Red-teaming helps find weaknesses before they lead to accidents, financial loss, or operational failures.

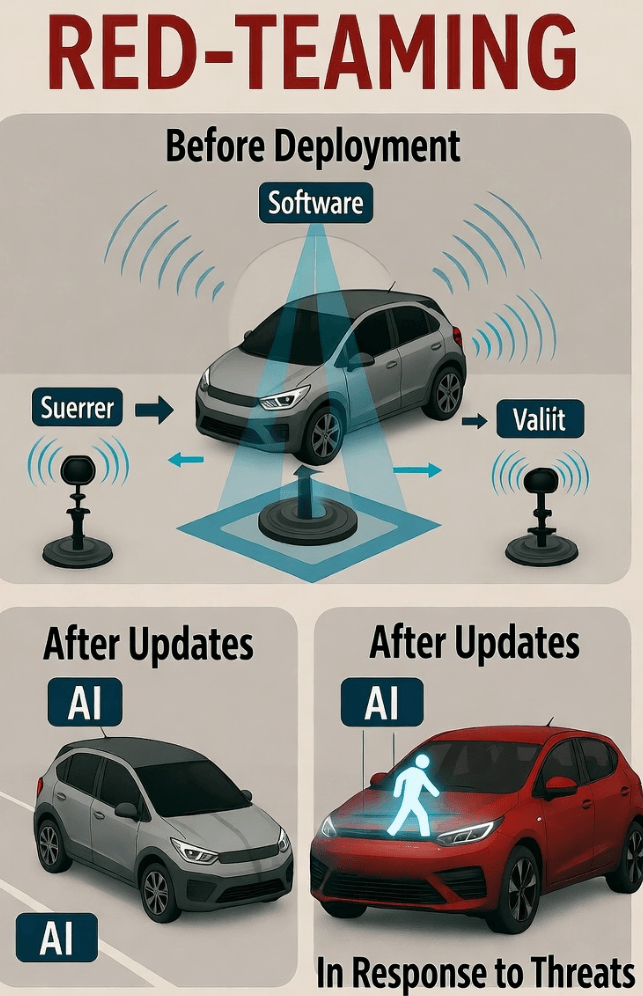

Red-teaming is most critical at three times: before deployment, after major updates or environmental changes, and following incidents or emerging threats. Combining AI testing with radar-specific scenarios helps confirm that systems operate safely and reliably.

Before Deployment or Public Release

Companies must ensure that AI and radar sensors work together correctly before public use. While mmWave radar can detect objects under many conditions, attackers may trick it or block its signals.

Understanding Radar Weaknesses

A self-driving car might mistake a radar-reflective metal sheet for a real obstacle. The car could brake suddenly or swerve unexpectedly. A drone might navigate incorrectly if radio-frequency signals interfere with radar. These mistakes can happen even when the AI model appears correct under normal testing conditions.

Red-Teaming Approaches

Teams can simulate such risks by combining manipulated radar signals with adversarial AI inputs. They can test unusual scenarios, such as moving obstacles or environmental interference. Engineers, security specialists, and ethicists working together can observe how the system reacts in complex situations. Red-teaming at this stage helps organizations fix problems before the system reaches the public, reducing the risk of accidents or costly recalls.
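As a rough illustration of the ghost-obstacle case described above, the sketch below injects one spoofed detection into an otherwise clean radar frame and checks whether a braking rule fires. The `Detection` fields, the `should_brake` rule, and all thresholds are hypothetical stand-ins, not any real planner's logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Detection:
    range_m: float            # distance to the reflector
    azimuth_deg: float        # bearing relative to the vehicle's heading
    closing_speed_mps: float  # positive when the gap is shrinking


def should_brake(frame: List[Detection],
                 lane_half_angle_deg: float = 5.0,
                 ttc_threshold_s: float = 2.0) -> bool:
    """Hypothetical planner rule: brake when an in-lane object's
    time-to-collision (range / closing speed) drops below the threshold."""
    for d in frame:
        in_lane = abs(d.azimuth_deg) <= lane_half_angle_deg
        if in_lane and d.closing_speed_mps > 0:
            if d.range_m / d.closing_speed_mps < ttc_threshold_s:
                return True
    return False


def inject_ghost(frame: List[Detection], range_m: float,
                 closing_speed_mps: float) -> List[Detection]:
    """Return a copy of the frame with a spoofed in-lane reflector added,
    standing in for a metal sheet or a replayed radar signal."""
    return frame + [Detection(range_m, 0.0, closing_speed_mps)]


# One real car far ahead: time-to-collision is 80 / 5 = 16 s, so no braking.
clean_frame = [Detection(range_m=80.0, azimuth_deg=1.0, closing_speed_mps=5.0)]
attacked_frame = inject_ghost(clean_frame, range_m=20.0, closing_speed_mps=15.0)

# Red-team finding: does a single spoofed detection trigger hard braking?
finding = (not should_brake(clean_frame)) and should_brake(attacked_frame)
print("single-frame ghost triggers braking:", finding)
```

If the finding is true, a natural follow-up test is whether requiring the object to persist across several frames, or to be confirmed by a second sensor, removes the false trigger.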

After Significant Updates or Environmental Changes

Autonomous AI systems evolve over time. Updates such as adding higher-resolution radar sensors, improving signal-processing algorithms, or expanding operating areas may introduce new risks. For example, a car equipped with a new radar might misread small obstacles if the AI model was not retrained on the new sensor's output. Unfamiliar urban streets or traffic patterns may expose radar blind spots.

Red-Teaming Strategies After Updates

Red-teaming after updates should focus on the components that changed and exercise them under realistic conditions. Teams simulate reflective objects, radio interference, or blocked sensors. They also test scenarios such as heavy rain, multi-path reflections, or temporary sensor occlusion. Observing how AI decisions interact with radar inputs helps confirm that updates have not created hidden weaknesses.
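One way to frame a post-update check is as a regression sweep: run the same environmental scenarios against the old and new configurations and list the cases that only the new one fails. The sketch below assumes a deliberately simplified model in which a target is detected when its signal-to-noise ratio (SNR) meets a threshold. The function names, attenuation figures, and thresholds are illustrative assumptions, not measured values.

```python
from itertools import product
from typing import List, Sequence, Tuple


def received_snr_db(clear_snr_db: float, range_m: float,
                    rain_db_per_km: float, occlusion_db: float) -> float:
    """Subtract two-way rain loss and a fixed occlusion loss from the
    clear-weather SNR. The signal crosses the rain twice (out and back)."""
    rain_loss_db = 2.0 * rain_db_per_km * range_m / 1000.0
    return clear_snr_db - rain_loss_db - occlusion_db


def find_regressions(old_threshold_db: float, new_threshold_db: float,
                     clear_snr_db: float, range_m: float,
                     rain_levels: Sequence[float],
                     occlusion_levels: Sequence[float]
                     ) -> List[Tuple[float, float]]:
    """Return (rain, occlusion) scenarios that the old configuration
    detected but the updated configuration misses."""
    regressions = []
    for rain, occlusion in product(rain_levels, occlusion_levels):
        snr = received_snr_db(clear_snr_db, range_m, rain, occlusion)
        if old_threshold_db <= snr < new_threshold_db:
            regressions.append((rain, occlusion))
    return regressions


# Hypothetical update: the new signal-processing chain needs 13 dB instead of 10 dB.
regressions = find_regressions(
    old_threshold_db=10.0, new_threshold_db=13.0,
    clear_snr_db=20.0, range_m=150.0,
    rain_levels=[0.0, 10.0],       # dB per km, one-way
    occlusion_levels=[0.0, 6.0],   # dB lost to a partly blocked sensor
)
print(regressions)  # [(10.0, 6.0)]
```

In this toy sweep the update looks fine in each condition alone and fails only when heavy rain and partial occlusion coincide, which is the kind of hidden weakness a combined scenario is meant to surface.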

Benefits of Post-Update Testing

Post-update red-teaming creates a feedback loop: testing reveals flaws in sensor calibration, AI models, or decision-making logic, engineers correct them, and the system becomes safer and more reliable.

After Incidents or Emerging Threats

Emerging threats such as radar jamming, spoofing, or adversarial AI attacks demand immediate attention. For instance, a radar-equipped drone might fly off course due to false reflections. A near-miss could occur if radar misperceives an obstacle and the AI misjudges the response.

Red-Teaming Response to Incidents

Teams can recreate incidents in a controlled environment by manipulating radar signals and AI inputs. They must also monitor industry trends and new attack methods, for example by tracking the adversary tactics and techniques catalogued in MITRE ATLAS. Regular testing, such as every three months for high-risk systems, helps maintain system resilience. Following standards like ISO/SAE 21434, which covers cybersecurity engineering for road vehicles, helps teams show that radar and AI components meet cybersecurity requirements.

Learning from Incidents

Red-teaming after incidents improves understanding of real-world risks. Teams analyze logs, measure response times, and see how AI and radar interact. Lessons from these tests guide software updates and hardware design, reducing the chance of repeated failures.
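The log analysis described above can be sketched as follows: pair each object's first radar detection with the first brake command issued for it, then flag responses that exceed a latency budget. The log format, event names, and 0.30 s budget are assumptions made for illustration, and real logs will differ.

```python
from typing import Dict, List, Tuple

LogEntry = Tuple[float, str, str]  # (timestamp in seconds, event name, object id)


def response_times(log: List[LogEntry]) -> Dict[str, float]:
    """Latency from an object's first radar detection to the first
    brake command issued for that object."""
    first_seen: Dict[str, float] = {}
    latency: Dict[str, float] = {}
    for timestamp, event, obj in sorted(log):
        if event == "radar_detect":
            first_seen.setdefault(obj, timestamp)
        elif event == "brake_command" and obj in first_seen:
            latency.setdefault(obj, timestamp - first_seen[obj])
    return latency


def over_budget(latency: Dict[str, float], budget_s: float) -> List[str]:
    """Objects whose response took longer than the allowed budget."""
    return sorted(obj for obj, dt in latency.items() if dt > budget_s)


# Hypothetical replay log from a recreated incident.
log = [
    (0.00, "radar_detect", "obj-1"),
    (0.05, "radar_detect", "obj-1"),
    (0.18, "brake_command", "obj-1"),
    (1.00, "radar_detect", "obj-2"),
    (1.45, "brake_command", "obj-2"),
    (2.00, "radar_detect", "obj-3"),  # detected but never acted on
]

latency = response_times(log)
slow = over_budget(latency, budget_s=0.30)
detected = {obj for _, event, obj in log if event == "radar_detect"}
unanswered = sorted(detected - set(latency))

print("slow responses:", slow)
print("no response:", unanswered)
```

Separating slow responses from missing ones matters because they usually point to different fixes: the first suggests a processing or planning delay, the second a perception or tracking gap.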

Best Practices for Red-Teaming Radar-Enhanced AI

Use Multiple Scenarios

Teams should test physical, digital, and environmental challenges together to uncover hidden weaknesses.
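A simple way to make sure the three kinds of challenge are tested together is to enumerate every combination as a scenario matrix. The category values below are placeholders drawn from the examples in this article, and a real campaign would define its own.

```python
from itertools import product
from typing import Dict, List

# Placeholder values for each layer; "none" and "clear" are the baselines.
PHYSICAL = ["none", "reflective_sheet", "sensor_blocked"]
DIGITAL = ["none", "adversarial_input", "spoofed_signal"]
ENVIRONMENT = ["clear", "heavy_rain", "dust"]


def build_matrix() -> List[Dict[str, str]]:
    """Every combination of physical, digital, and environmental conditions."""
    return [
        {"physical": p, "digital": d, "environment": e}
        for p, d, e in product(PHYSICAL, DIGITAL, ENVIRONMENT)
    ]


def is_combined(scenario: Dict[str, str]) -> bool:
    """True when all three layers are stressed at the same time."""
    return (scenario["physical"] != "none"
            and scenario["digital"] != "none"
            and scenario["environment"] != "clear")


matrix = build_matrix()
combined = [s for s in matrix if is_combined(s)]
print(len(matrix), "scenarios,", len(combined), "stress all three layers at once")
```

Keeping the all-baseline scenario in the matrix gives a control run against which the attacked runs can be compared.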

Cross-Functional Collaboration

Including engineers, security experts, ethicists, and system operators provides different perspectives that improve testing quality.

Testing at Different Levels

Red-teaming should cover unit-level AI tests, system-level radar tests, and full integration tests.

Documentation and Regular Testing

Teams must document findings, fixes, and lessons learned. Continuous testing is necessary because risks evolve with updates and new threats.

Final Thoughts

Integrating mmWave radar with autonomous AI systems offers measurable benefits: enhanced perception, improved decision-making, and operational safety. But it also requires careful red-teaming at key moments—before deployment, after updates, and after incidents—to uncover vulnerabilities, validate system updates, and address new threats.

By combining AI red-teaming with radar-specific tests, organizations can develop autonomous systems that are not only intelligent but also resilient and trustworthy. Continuous monitoring, testing, and proactive defence strategies ensure these systems operate safely and reliably in real-world conditions.

For practical guidance, you may explore Linpowave radar applications or consult Secure My ORG for cybersecurity and red-teaming services.

FAQ: Red-Teaming Autonomous AI with mmWave Radar

Q1: What is mmWave radar and why use it?

A: mmWave radar transmits high-frequency radio waves and uses their reflections to detect objects and measure their distance and speed. It works in rain, fog, low light, or dust. Pairing it with AI improves situational awareness for cars, drones, and robots.
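To make the distance and speed measurement concrete, here is a minimal sketch that assumes a frequency-modulated continuous-wave (FMCW) radar, a common design for mmWave sensors. The article does not say which waveform a given product uses, and the chirp parameters and the 77 GHz carrier are example values only.

```python
C = 299_792_458.0  # speed of light in m/s


def fmcw_range_m(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Range from the beat frequency between the transmitted and received
    chirp: R = c * f_beat / (2 * slope), with slope = bandwidth / duration."""
    slope_hz_per_s = bandwidth_hz / chirp_s
    return C * beat_hz / (2.0 * slope_hz_per_s)


def doppler_velocity_mps(doppler_hz: float, carrier_hz: float) -> float:
    """Radial speed from the Doppler shift: v = wavelength * f_doppler / 2."""
    wavelength_m = C / carrier_hz
    return wavelength_m * doppler_hz / 2.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest separable range difference: c / (2 * bandwidth)."""
    return C / (2.0 * bandwidth_hz)


# Example values (assumed): 1 GHz sweep over 50 microseconds at 77 GHz.
distance = fmcw_range_m(beat_hz=2e6, bandwidth_hz=1e9, chirp_s=50e-6)
speed = doppler_velocity_mps(doppler_hz=5e3, carrier_hz=77e9)
resolution = range_resolution_m(bandwidth_hz=1e9)

print(f"range ~ {distance:.1f} m, speed ~ {speed:.1f} m/s, "
      f"resolution ~ {resolution:.2f} m")
```

Because range and speed are read from frequency shifts, an attacker who can inject a signal with a chosen frequency offset can, in principle, make the radar report an object that is not there.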

Q2: How can radar be attacked?

A: Attackers may use reflective surfaces, radio interference, or blocking objects to trick radar. Such attacks can make AI see false obstacles or miss real ones.

Q3: What is radar-specific red-teaming?

A: Radar-specific red-teaming tests AI systems under simulated radar faults and attacks. Teams may simulate moving or reflective objects, introduce jamming signals, or combine radar attacks with AI input manipulations.

Q4: How often should red-teaming happen?

A: Organizations should test before deployment, after major updates, and after incidents or new threats. Teams responsible for systems in high-risk domains should also red-team on a regular schedule, such as every three months.

Q5: What standards or tools support red-teaming for radar-equipped AI systems?

A: Useful resources include MITRE ATLAS, a knowledge base of adversary tactics and techniques against AI systems, and ISO/SAE 21434, the cybersecurity engineering standard for road vehicles.

Q6: Can red-teaming remove all risks?

A: No. Red-teaming identifies weak spots and strengthens systems, but organizations must continue monitoring, testing, and improving defences to handle new threats.